Probability Theory - Medical Biotechnology

Overview

Welcome to my course on Probability Theory for first-year students of the Master’s program “Medical Biotechnology” at MIPT.

This is the first part of the course. This semester is devoted to discrete probability theory, including an introduction to random variables and how to compute their expected value, variance and correlation coefficient. In addition, we will introduce Markov's and Chebyshev's inequalities and prove Chebyshev's form of the weak law of large numbers.

Next semester, we will generalize the concept of a random variable to that of a measurable function. We will make extensive use of the CDF and the PDF of random variables to compute their main numerical characteristics. Overall, we will complete the construction of a probability space. We will conclude the course with the proof of one of the main results in probability theory - the central limit theorem.

Prerequisites

Basic set theory, basic measure theory, calculus, mathematical analysis, combinatorics. In essence, all the material on pages 1-57 of the book "Introduction to Probability for Data Science" by Stanley H. Chan.

Problem sets

Problem set 1. [solutions] Combinatorics

Problem set 2. [solutions] Classical probability

Problem set 3. [solutions] Geometric probability. Bernoulli scheme and independence.

Problem set 4. [solutions] Conditional probability. Bayes Formula.

Problem set 5. [solutions] Discrete random variables. Probability mass function.

Problem set 6. [solutions] Expected value and Variance. Covariance.

Problem set 7. Discrete joint probability distributions.

Problem set 8. [solutions] De Moivre–Laplace theorem.

Attendance & Marks

Topics discussed in lectures

Lecture 1. [06/10] Basics of combinatorics. Rule of sum and rule of product. Pigeon-hole principle. Permutations and combinations. Binomial and Multinomial theorem. Combinatorial identities (7 formulas). Inclusion-exclusion principle with proof.

Lecture 2. [13/10] Random experiments and notion of probability. Probability in nature. Probability triple. Discrete sample space. Classical probability model. Bernoulli scheme. k successes in n trials. Sum of all outcomes gives 1. Geometric probability. Probability of two people meeting.

Lecture 3. [20/10] Probability of success in first trial. Probability triple. Definition and examples of sigma-algebras. Algebra which is not a sigma-algebra. Measurable space. Probability measure: definition and properties. Continuity of probability. Probabilistic principle of inclusion-exclusion.

Lecture 4. [27/10] Conditional probability. Theorem of multiplication. Independence of events (pairwise and mutual). Example of pairwise but not necessarily mutually independent events. Example on the necessary conditions for mutual independence. Partition of Omega. Total Probability Formula.

Lecture 5. [03/11] Proof of total probability formula. Bayes Theorem. Problem of the blood test. Notion of random variables. Number of successes in Bernoulli scheme as a random variable. Measurable function. Borel sigma-algebra. Probability Mass Function (PMF). Independence of discrete random variables. Examples of discrete distributions (Bern(p), Bin(n,p), Geom(p), Pois(λ)).

Lecture 6. [10/11] Poisson limit theorem. Convolution for discrete random variables. Expected value of a random variable. Variance.

Lecture 7. [17/11] Properties of the Expected value with proofs (5). Variance. Properties with proofs (3).

Test 1 [24/11]

Lecture 8. [06/12] Covariance. Properties of covariance. Example of dependent random variables with zero covariance. Variance of sum of n random variables. Correlation coefficient. Theorem on |corr(x,y)| <=1.

Lecture 9. [13/12] |corr(x,y)|=1 (meaning). Notion of random graphs. P(G(n,p) has m edges). Expected value of number of triangles. Markov's and Chebyshev's inequalities. Application of inequalities for finding number of triangles in G(n,p).

Lecture 10. [15/12] Cauchy–Bunyakovsky inequality. Proving |corr(x,y)| <=1 with the Cauchy–Bunyakovsky inequality. Chebyshev's form of WLLN. Generalization of WLLN.

Lecture 11. [22/12] Discrete joint distributions. Properties. Joint distribution table. Marginal distributions. Covariance matrix.

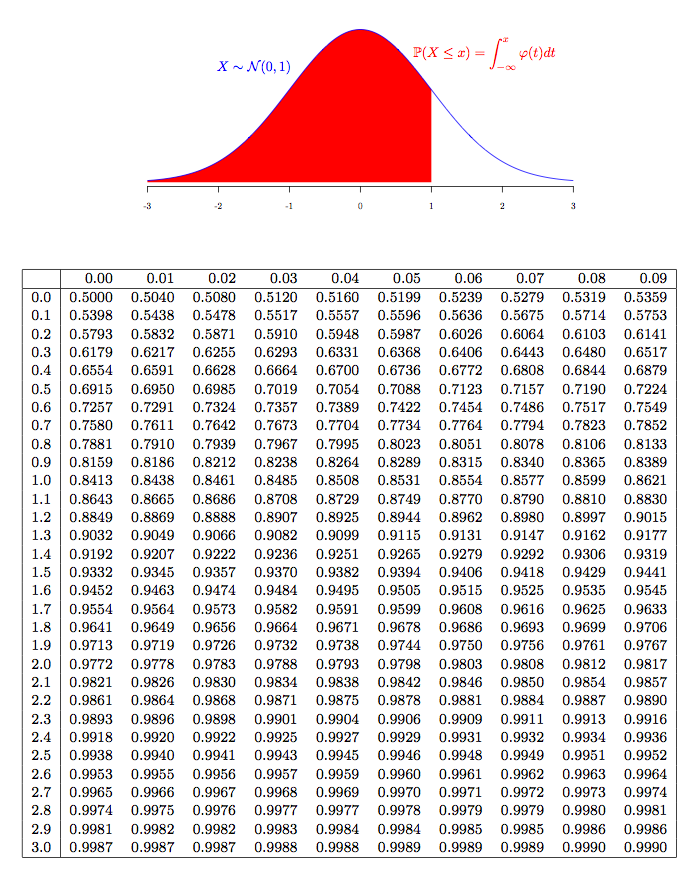

Lecture 12. [27/12] Local de Moivre–Laplace theorem. Integral de Moivre–Laplace theorem.
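As an illustration of the total probability formula and Bayes' theorem from Lectures 4 and 5, here is a minimal Python sketch of a blood-test calculation. The prevalence, sensitivity and false-positive rate below are hypothetical values chosen for the example, not the numbers used in class, and the function name `posterior_positive` is also an assumption.

```python
from fractions import Fraction


def posterior_positive(prior, sensitivity, false_positive_rate):
    """Return P(disease | positive test)."""
    # Total probability formula over the partition {disease, no disease}:
    # P(+) = P(+ | D) P(D) + P(+ | not D) P(not D)
    p_positive = sensitivity * prior + false_positive_rate * (1 - prior)
    # Bayes' theorem: P(D | +) = P(+ | D) P(D) / P(+)
    return sensitivity * prior / p_positive


# Hypothetical inputs (assumptions, not taken from the lecture):
prior = Fraction(1, 100)                 # 1% of the population has the disease
sensitivity = Fraction(95, 100)          # P(positive | disease)
false_positive_rate = Fraction(5, 100)   # P(positive | no disease)

posterior = posterior_positive(prior, sensitivity, false_positive_rate)
print(posterior, float(posterior))
```

With these assumed inputs the posterior is 19/118, roughly 0.16: even after a positive result, the disease remains unlikely because it is rare in the population. Exact fractions are used so that the result can be checked by hand.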

Course guidelines and grading system

At the end of this course, you will get a grade from 1 to 10 (you need at least 3 to pass) according to the following parameters:

- Max of 1 point for class attendance (1 if you missed no more than 2 classes, and 0.5 if you missed no more than 3)

- Max of 3 points for Test 1 [24/11]

- Max of 3 points for Test 2 [January]

- Max of 3 points for final exam on theory [January]

IMPORTANT: You must pass the exam on theory in order to pass the course.

Tests 1 and 2 are closed-book, that is, you are not allowed to use any material. At the theoretical exam, on the other hand, you may use any written material (lecture and seminar notes, printed notes or books) while preparing your solutions, and only then, but not electronic devices (phones, laptops). In both cases, you are not permitted to help your classmates in any way.

The number of points you get for each activity is either an integer x or x+0.5.

If your final grade (after the final exam) is not an integer (z+0.5), you can solve an extra problem to raise your grade to z+1. Otherwise you get just z.
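The grading arithmetic above can be sketched in Python as follows. The function names are hypothetical, and two points are assumptions rather than stated rules: missing more than 3 classes is taken to give 0 attendance points, and the requirement to pass the theory exam is not modeled because the passing threshold is not stated here.

```python
import math


def attendance_points(classes_missed):
    """Attendance score: 1 for at most 2 missed classes, 0.5 for at most 3."""
    if classes_missed <= 2:
        return 1.0
    if classes_missed <= 3:
        return 0.5
    return 0.0  # assumption: the rules above do not cover this case


def final_grade(classes_missed, test1, test2, exam, solved_extra_problem=False):
    """Sum the four components and apply the z+0.5 rule."""
    total = attendance_points(classes_missed) + test1 + test2 + exam
    whole = math.floor(total)
    # A total of the form z+0.5 becomes z+1 only if the extra problem is solved.
    if total != whole and solved_extra_problem:
        return whole + 1
    return whole


print(final_grade(1, 2.5, 2, 2))                             # total 7.5 -> 7
print(final_grade(1, 2.5, 2, 2, solved_extra_problem=True))  # total 7.5 -> 8
```

Since every component is a multiple of 0.5, the floating-point sums here are exact.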

Every week, after class, I will upload a new homework. Each of you has to solve (or at least try to solve) these problems. During the seminar, I will randomly choose one of you to explain your solution to everyone.

Syllabus

- Basics of combinatorics. Rule of sum and rule of product. Pigeon-hole principle. Permutations and combinations. Binomial and Multinomial theorem. Combinatorial identities (7 formulas).

- Inclusion-exclusion principle. Random experiments and notion of probability. Probability in nature. Probability triple. Discrete sample space. Classical probability model.

- Bernoulli scheme. k successes in n trials. Sum of all outcomes gives 1. Geometric probability. Probability of two people meeting.

- Probability of getting success in first trial. Definition and examples of sigma-algebras. Algebra which is not a sigma-algebra. Measurable space. Probability measure: definition and properties.

- Continuity of the probability measure. Probability of union of intersecting events and upper bound. Conditional probability. Theorem of multiplication. Independence of events (pairwise and mutual). Example of pairwise but not necessarily mutually independent events. Example on the necessary conditions for mutual independence.

- Partition of Omega. Total Probability Formula. Bayes Theorem. Monty Hall problem. Problem on choosing an easy ticket. Notion of random variables. Number of successes in Bernoulli scheme as a random variable. Measurable function. Borel sigma-algebra. Probability Mass Function (PMF).

- Independence of discrete random variables. Examples of discrete distributions (Bern(p), Bin(n,p), Geom(p), Pois(λ)). Expected value of a random variable. Problem on choosing an easy question at the exam.

- Properties of the Expected value with proofs (5). Variance. Properties with proofs (3). Covariance. Example of dependent random variables with zero covariance.

- Expected value and variance of random variables with distribution Ber(p), U({1,...,n}), Bin(n,p), Pois(λ). Properties of covariance (5). Variance of sum of n random variables. Correlation coefficient, definition and meaning. |corr(x,y)|<=1. Proof 1.

- Notion of random graphs. Markov's inequality. Chebyshev's inequality. Cauchy–Bunyakovsky inequality. Application to the proof of the bound on the correlation coefficient.

- Weak Law of large numbers. General form of WLLN. Joint distribution of two random variables. Properties. Random walk.

- De Moivre–Laplace local limit theorem. De Moivre–Laplace integral limit theorem.
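To make Chebyshev's inequality and the weak law of large numbers from the syllabus concrete, the sketch below compares, for the number of successes S_n in a Bernoulli scheme, the exact probability P(|S_n/n - p| >= eps) with the Chebyshev bound p(1-p)/(n eps^2). The function names and the choice p = 1/2, eps = 1/10 are assumptions made for illustration only.

```python
from fractions import Fraction
from math import comb


def binomial_pmf(n, p, k):
    """P(S_n = k) for S_n ~ Bin(n, p)."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)


def deviation_probability(n, p, eps):
    """Exact P(|S_n / n - p| >= eps), summed over the binomial PMF."""
    return sum(
        binomial_pmf(n, p, k)
        for k in range(n + 1)
        if abs(Fraction(k, n) - p) >= eps
    )


def chebyshev_bound(n, p, eps):
    """Chebyshev's bound Var(S_n / n) / eps^2 = p(1-p) / (n eps^2), capped at 1."""
    return min(Fraction(1), p * (1 - p) / (n * eps**2))


p, eps = Fraction(1, 2), Fraction(1, 10)  # illustrative values
for n in (10, 100, 1000):
    exact = deviation_probability(n, p, eps)
    bound = chebyshev_bound(n, p, eps)
    print(n, float(exact), float(bound))
```

Exact fractions avoid rounding problems when a deviation equals eps exactly. As n grows, both the bound and the exact probability shrink towards 0, which is the statement of the weak law. The bound is usually far from tight.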

Recommended literature

- Probability (Graduate Texts in Mathematics) 2nd Edition - Albert N. Shiryaev.

- Introduction to Probability for Data Science - Stanley H. Chan. [download]

- Probability and Statistics for Data Science - Carlos Fernandez-Granda.

- Introduction To Probability - Joseph K. Blitzstein, Jessica Hwang.

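- Мера и интеграл (Measure and Integral), Дьяченко М.И.

- Курс теории вероятностей и математической статистики (A Course in Probability Theory and Mathematical Statistics), Севастьянов Б.А.

- Курс теории вероятностей (A Course in Probability Theory), Чистяков В.П.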

Recommended extra material

- Short lectures on measure theory [playlist]

- Short lectures on Probability Theory [playlist]

- Probability theory course IMPA [playlist]

- Probability theory course Harvard University [playlist]

- Interactive videos on probability from 3Blue1Brown [video]

- Lectures on introduction to probability (in Russian) [playlist]